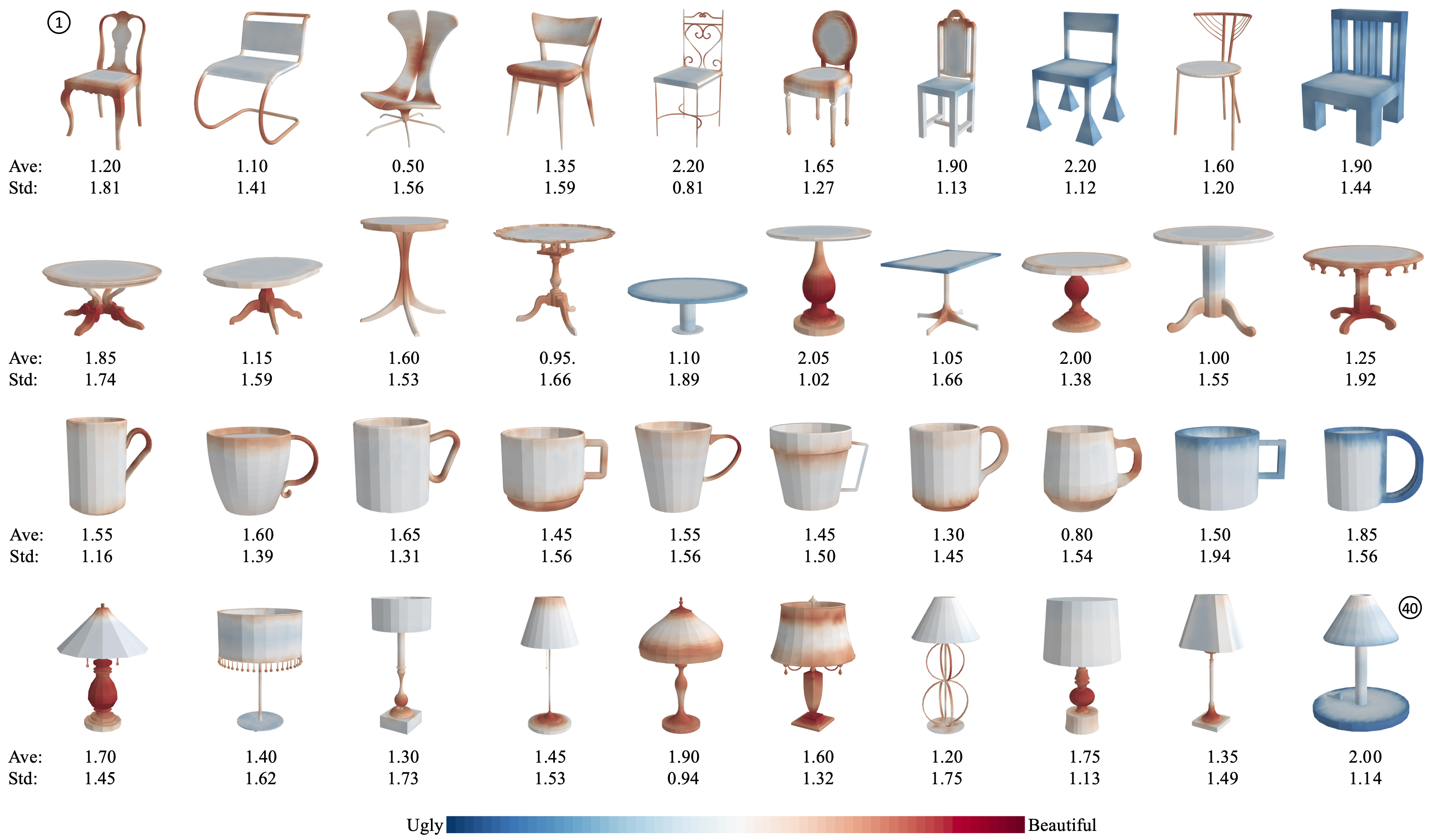

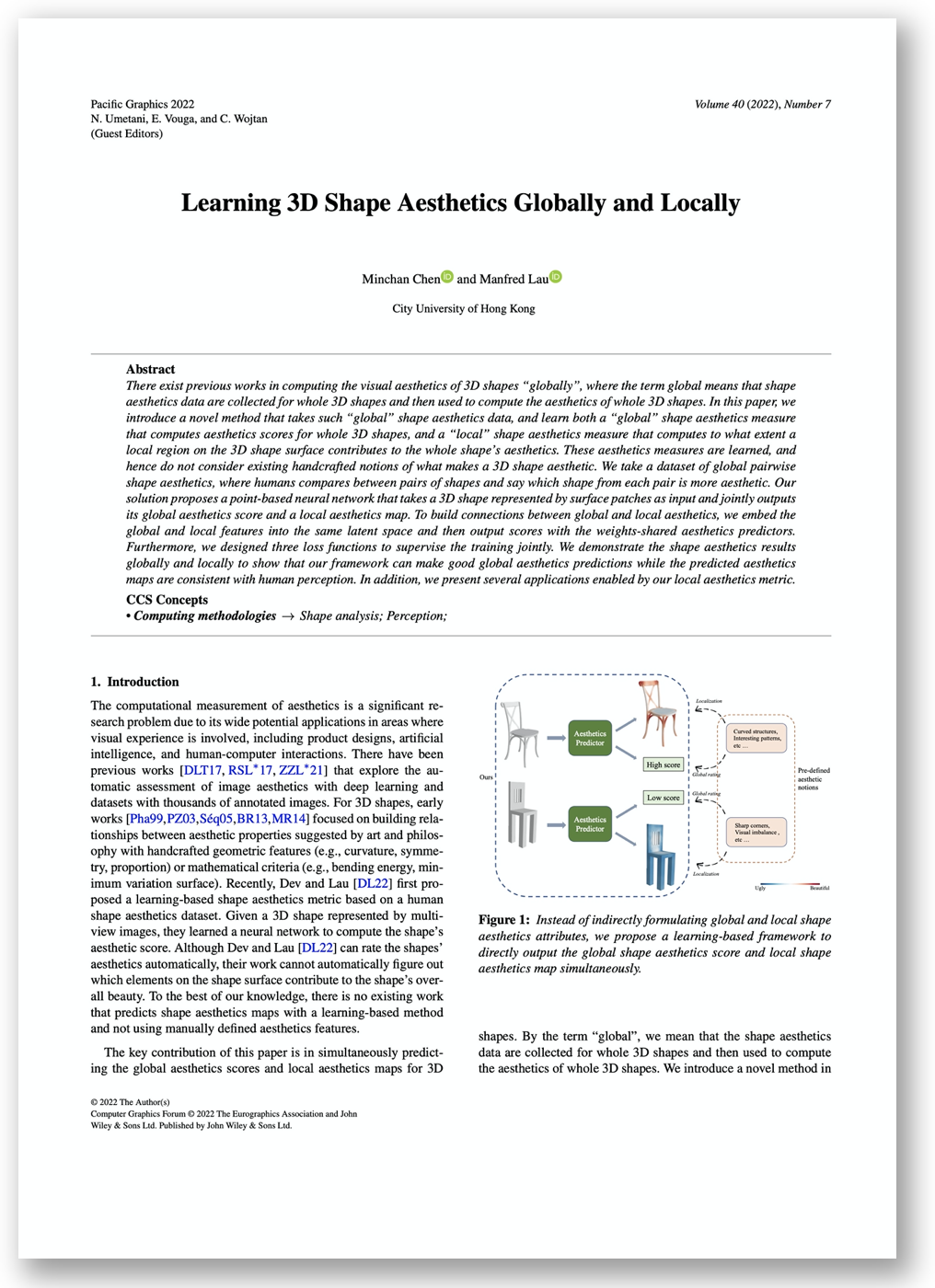

Previous work has computed the visual aesthetics of 3D shapes “globally”: shape aesthetics data are collected for whole 3D shapes and then used to compute the aesthetics of whole 3D shapes. In this paper, we introduce a novel method that takes such “global” shape aesthetics data and learns two measures. The first is a “global” shape aesthetics measure, which computes aesthetics scores for whole 3D shapes. The second is a “local” shape aesthetics measure, which computes the extent to which a local region on the 3D shape surface contributes to the whole shape’s aesthetics. Because these aesthetics measures are learned, they do not rely on existing handcrafted notions of what makes a 3D shape aesthetic. We use a dataset of global pairwise shape aesthetics, in which humans compare pairs of shapes and indicate which shape in each pair is more aesthetic. Our solution is a point-based neural network that takes a 3D shape represented by surface patches as input and jointly outputs its global aesthetics score and a local aesthetics map. To connect global and local aesthetics, we embed the global and local features into the same latent space and then output scores with weight-shared aesthetics predictors. Furthermore, we design three loss functions that jointly supervise training. We present global and local shape aesthetics results showing that our framework makes good global aesthetics predictions and that its predicted aesthetics maps are consistent with human perception. In addition, we present several applications enabled by our local aesthetics metric.
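The abstract does not specify the network layers, how patch features are pooled, or the exact form of the three losses. The following is a minimal sketch under stated assumptions, not the paper's implementation. It shows how one weight-shared predictor applied in a common feature space can yield both a global score and a local map. It also shows how pairwise human judgments can supervise the global score. The linear predictor, the mean pooling, the logistic (Bradley-Terry style) ranking loss, and all function names (`shared_predictor`, `global_feature`, `predict`, `pairwise_ranking_loss`) are hypothetical illustrations. The toy feature values are made up.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def shared_predictor(feature: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Hypothetical linear aesthetics predictor, shared by global and local features."""
    return sum(f * w for f, w in zip(feature, weights)) + bias


def global_feature(patch_features: Sequence[Sequence[float]]) -> Vector:
    """Assumed mean pooling of per-patch features into one shape-level feature."""
    n = len(patch_features)
    dim = len(patch_features[0])
    return [sum(p[d] for p in patch_features) / n for d in range(dim)]


def predict(patch_features: Sequence[Sequence[float]],
            weights: Sequence[float], bias: float) -> Tuple[float, Vector]:
    """Return (global score, local aesthetics map) using the same predictor weights."""
    g = shared_predictor(global_feature(patch_features), weights, bias)
    local_map = [shared_predictor(p, weights, bias) for p in patch_features]
    return g, local_map


def pairwise_ranking_loss(score_preferred: float, score_other: float) -> float:
    """Logistic ranking loss log(1 + exp(-(s_pref - s_other))).

    Small when the human-preferred shape already scores higher. Computed as a
    numerically stable softplus so large margins do not overflow.
    """
    margin = score_preferred - score_other
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# Toy usage: two shapes, each with two surface patches and 2-D patch features.
shape_a = [[1.0, 0.5], [0.8, 0.2]]
shape_b = [[0.1, 0.0], [0.3, 0.4]]
weights, bias = [1.0, -0.5], 0.0

global_a, map_a = predict(shape_a, weights, bias)
global_b, map_b = predict(shape_b, weights, bias)

loss_ab = pairwise_ranking_loss(global_a, global_b)  # if humans judged A more aesthetic
loss_ba = pairwise_ranking_loss(global_b, global_a)  # if humans judged B more aesthetic
print(global_a, map_a, loss_ab, loss_ba)
```

With a linear predictor and mean pooling, the global score is exactly the mean of the local map. That is one simple way a shared predictor ties local scores to the global judgment. A real network would use nonlinear layers, and the paper's own losses may differ from this single ranking term.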